The networking community spends a great deal of effort trying to minimize latency. It would be really great if the data I transfer could take a geographically efficient route, and if the things I ask for were sent back promptly. It turns out that reality lags a bit behind this ideal.

Geographic Efficiency.

There is a hard physical limit, the speed of light, which means it will take at least 67ms for my traffic to reach the other side of the world. If that were the only cost, we would be in great shape. In reality, it generally takes 200 to 250ms to get there, so if you want a response you are waiting 400 to 500ms. There are two main sources of this inefficiency.

First, data does not travel along the shortest path to the destination, because there isn't a wire along that path. Instead, it travels through the various autonomous systems that form the Internet. These systems are interconnected according to economic considerations, which often win out over choosing the true shortest path. Second, there isn't a single wire connecting you and the destination. Instead, the data passes through many routers (expect 10-15 within the continental US, and more on other continents). Each router needs to figure out which of the many wires connected to it my data should be sent out on. To do this, it has to spend time reading the data, checking that it has a valid checksum and is 'intact', and then placing it in the queue for the outgoing wire behind any previously routed data. A substantial fraction of transfer time is spent in routers rather than on the wire.
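The 67ms figure is simply half the Earth's circumference divided by the speed of light. The minimal Python sketch below reproduces it, and also shows the bound for light in optical fiber, which travels at roughly two-thirds of its vacuum speed. The `store_and_forward_ms` helper is a hypothetical, simplified model of the per-router cost described above (receive the whole packet, inspect it, wait in the outgoing queue); the parameter values in the usage line are illustrative assumptions, not measurements.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458   # in a vacuum
EARTH_CIRCUMFERENCE_KM = 40_075         # at the equator
FIBER_VELOCITY_FACTOR = 2 / 3           # rough speed of light in fiber relative to vacuum


def propagation_ms(distance_km: float, velocity_factor: float = 1.0) -> float:
    """Time for a signal to cover distance_km at velocity_factor * c."""
    return distance_km / (SPEED_OF_LIGHT_KM_PER_S * velocity_factor) * 1000


def store_and_forward_ms(hops: int, packet_bytes: int, link_mbps: float,
                         per_hop_processing_ms: float,
                         per_hop_queue_ms: float) -> float:
    """Hypothetical model of the delay added by routers: each one must
    receive the whole packet (transmission time), inspect it, e.g. verify
    the checksum (processing), and wait its turn on the outgoing link
    (queueing) before forwarding it."""
    transmission_ms = packet_bytes * 8 / (link_mbps * 1_000_000) * 1000
    return hops * (transmission_ms + per_hop_processing_ms + per_hop_queue_ms)


half_way_round = EARTH_CIRCUMFERENCE_KM / 2
print(f"vacuum bound: {propagation_ms(half_way_round):.1f} ms")   # 66.8 ms
print(f"fiber bound:  {propagation_ms(half_way_round, FIBER_VELOCITY_FACTOR):.1f} ms")  # 100.3 ms
```

With made-up values of 15 hops, 1500-byte packets, 100 Mbit/s links, 0.5ms processing and 2ms queueing per hop, `store_and_forward_ms(15, 1500, 100, 0.5, 2.0)` adds about 39ms on top of propagation; real per-hop costs vary widely with load.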

Technical Efficiency.

Applications are built on top of this network, and have to cope with delays that users notice each time data needs to be transferred. How do they do it? The first realization is that there are a couple of common patterns for transferring data. One is 'near realtime', with examples like phone calls, video chats, and the like. For this there is UDP, which offers best-effort delivery: you package your data into small datagrams and send them out. There is no guarantee of if or when they will reach the other side. That is acceptable, because if there is a problem it isn't worth waiting for the data to show up much later; the damage has already been done. The other common protocol is TCP, which guarantees that a data stream is reconstructed intact and in order at the other end. The potential downside for this discussion is that a TCP connection has an initiation phase. This takes one round trip (you send a request, the destination acknowledges it, and then you can begin sending data). Tick off 400ms if you were trying to reach somewhere in Europe or Asia.
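To make the difference concrete, here is a minimal sketch using Python's standard `socket` module over the loopback interface. It assumes loopback is available, and because loopback hides wide-area delays it shows the mechanics rather than the timing. The UDP sender just fires a datagram with no setup, while the TCP client's `connect()` does not return until the handshake has completed. The function names `udp_send_once` and `tcp_send_stream` are hypothetical.

```python
import socket


def udp_send_once(payload: bytes) -> bytes:
    """Send one datagram over loopback with no connection setup."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as rx, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as tx:
        rx.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
        rx.settimeout(2.0)
        # No handshake: the datagram is simply sent. On a real network it
        # could be lost or reordered, and nothing would retransmit it.
        tx.sendto(payload, rx.getsockname())
        data, _ = rx.recvfrom(65535)
        return data


def tcp_send_stream(payload: bytes) -> bytes:
    """Open a TCP connection over loopback, then send a byte stream."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))
        server.listen(1)
        server.settimeout(2.0)
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as client:
            client.settimeout(2.0)
            # connect() blocks until the kernel has completed the
            # SYN / SYN-ACK / ACK exchange: one round trip before any data.
            client.connect(server.getsockname())
            client.sendall(payload)
            client.shutdown(socket.SHUT_WR)   # signal end of stream
            conn, _ = server.accept()
            with conn:
                conn.settimeout(2.0)
                chunks = []
                while True:
                    chunk = conn.recv(4096)
                    if not chunk:             # peer closed its side
                        break
                    chunks.append(chunk)
                return b"".join(chunks)
```

Both calls hand back what was sent, but only the TCP path pays for a connection setup first; that setup round trip is what costs 400ms when the other end is on another continent.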

Once a connection is made, you have to actually request the web page you want. This is done using HTTP. The main cost worth noting with HTTP is that it fetches individual resources, one request per resource. If I want the page, I ask for it, and the server responds. The response may contain a reference to an image. I then need to request that image and receive it in another response; the protocol doesn't let the server send me additional resources until I ask for them. (Why, you might ask, would you build pages out of multiple resources if you care about speed? The answer seems to be that I might already have some of those resources saved in a local cache, and won't necessarily need to request them from you.) The upshot is that, at best, two additional rounds of communication are required to gather all the resources needed to actually show a web page.
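The round-trip counting above can be sketched as an idealized model in Python. It assumes that references at the same depth are all requested in parallel on an already-open connection, that a cached resource is fresh and needs no request at all, and that bandwidth, server time and rendering are negligible. `Resource`, `fetch_rounds` and `page_load_floor_ms` are hypothetical names, not part of any HTTP library.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Resource:
    """A fetchable item (page, image, stylesheet) and the items it references.
    `cached` means a fresh local copy exists, so no request is needed."""
    name: str
    cached: bool = False
    refs: list[Resource] = field(default_factory=list)


def fetch_rounds(res: Resource) -> int:
    """Request/response rounds needed to obtain `res` and everything it
    references. A reference is only discovered once the resource that
    contains it has arrived, so each level of nesting costs one more round;
    sibling references are assumed to be requested in parallel."""
    own = 0 if res.cached else 1
    deepest_child = max((fetch_rounds(r) for r in res.refs), default=0)
    return own + deepest_child


def page_load_floor_ms(page: Resource, rtt_ms: float,
                       handshake_rounds: int = 1) -> float:
    """Lower bound on load time: TCP handshake plus the fetch rounds."""
    return (handshake_rounds + fetch_rounds(page)) * rtt_ms


page = Resource("index.html", refs=[Resource("logo.png"), Resource("style.css")])
print(fetch_rounds(page))             # 2
print(page_load_floor_ms(page, 400))  # 1200
```

At the 400ms round trip quoted above for Europe or Asia, even this best case takes 1.2 seconds; if the image were already cached, the floor would drop to 800ms, which is the trade-off the parenthetical describes. A stylesheet that itself references a font would add one more round.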

Reality.

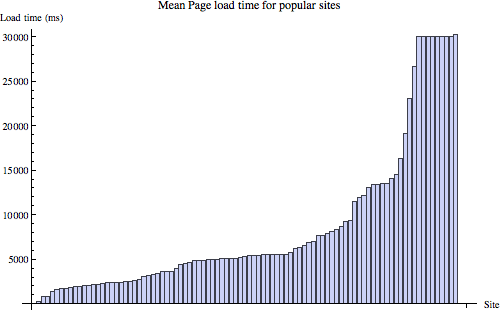

This chart shows the average time it took to load each of 100 different web sites. The requests were made from PlanetLab, a geographically diverse set of university computers (which are not terribly reliable). Ignore the tails, which are the product of requests being banned. The chart indicates that for an average user (averaged around the world, not just in the US), it takes 3-5 seconds to load a web page. That's horrible!